Lazy loading is a technique for delaying the loading of both kernels and CPU-side modules until the application requires them. The default behavior is to preemptively load all modules the first time a library is initialized. Lazy loading can yield significant savings, not only in device and host memory, but also in the end-to-end execution time of your algorithms.

Lazy loading has been part of CUDA since the 11.7 release, and subsequent CUDA releases have continued to augment and extend it. From the application development perspective, nothing specific is required to opt into lazy loading: your existing applications work with it as-is.

The tradeoff with lazy loading is a small amount of latency at the point in the application where functions are first loaded. This is lower overall than the total latency without lazy loading. If you have operations that are particularly latency-sensitive, you may want to profile your applications.

Table: Example application speedup with lazy loading.

All libraries used with lazy loading must be built with CUDA 11.7 or later to be eligible.

Lazy loading is not enabled in the CUDA stack by default in this release. To evaluate it for your application, run with the environment variable CUDA_MODULE_LOADING=LAZY set.
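One way to try this is to time the same application with and without the variable set. The sketch below is a minimal illustration rather than an official tool: the helper name `run_with_module_loading` is hypothetical, it measures only wall-clock time for the whole process, and it assumes your application can be started from a command line.

```python
import os
import subprocess
import time


def run_with_module_loading(cmd, mode):
    """Run cmd in a child process and return (elapsed_seconds, returncode).

    mode is the value for CUDA_MODULE_LOADING (for example "LAZY", as named
    in the text). Pass None to leave the variable unset so the CUDA stack
    uses its default behavior. This helper is hypothetical, not a CUDA API.
    """
    env = dict(os.environ)
    if mode is None:
        # Remove any inherited setting so the default behavior applies.
        env.pop("CUDA_MODULE_LOADING", None)
    else:
        env["CUDA_MODULE_LOADING"] = mode

    start = time.perf_counter()
    result = subprocess.run(cmd, env=env, check=False)
    elapsed = time.perf_counter() - start
    return elapsed, result.returncode
```

For example, calling `run_with_module_loading(["./my_cuda_app"], None)` and then `run_with_module_loading(["./my_cuda_app"], "LAZY")` (where `./my_cuda_app` is a placeholder for your own binary) gives two end-to-end timings to compare. A single run is noisy, so repeating each measurement several times is advisable, and a profiler remains the better choice for locating first-load latency inside the application.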

NVIDIA Hopper and NVIDIA Ada Lovelace architecture support

CUDA applications can immediately benefit from increased streaming multiprocessor (SM) counts, higher memory bandwidth, and higher clock rates in new GPU families. CUDA and the CUDA libraries expose new performance optimizations based on GPU hardware architecture enhancements.

CUDA 12.0 exposes programmable functionality for many features of the NVIDIA Hopper and NVIDIA Ada Lovelace architectures. Many tensor operations are now available through public PTX, including 32x Ultra xMMA (with FP8 and FP16). Launch parameters control membar domains in NVIDIA Hopper GPUs.

CUDA Toolkit 12.0 is available to download. The release also includes updated support for the latest Linux versions. For more information, see the CUDA Toolkit 12.0 Release Notes.

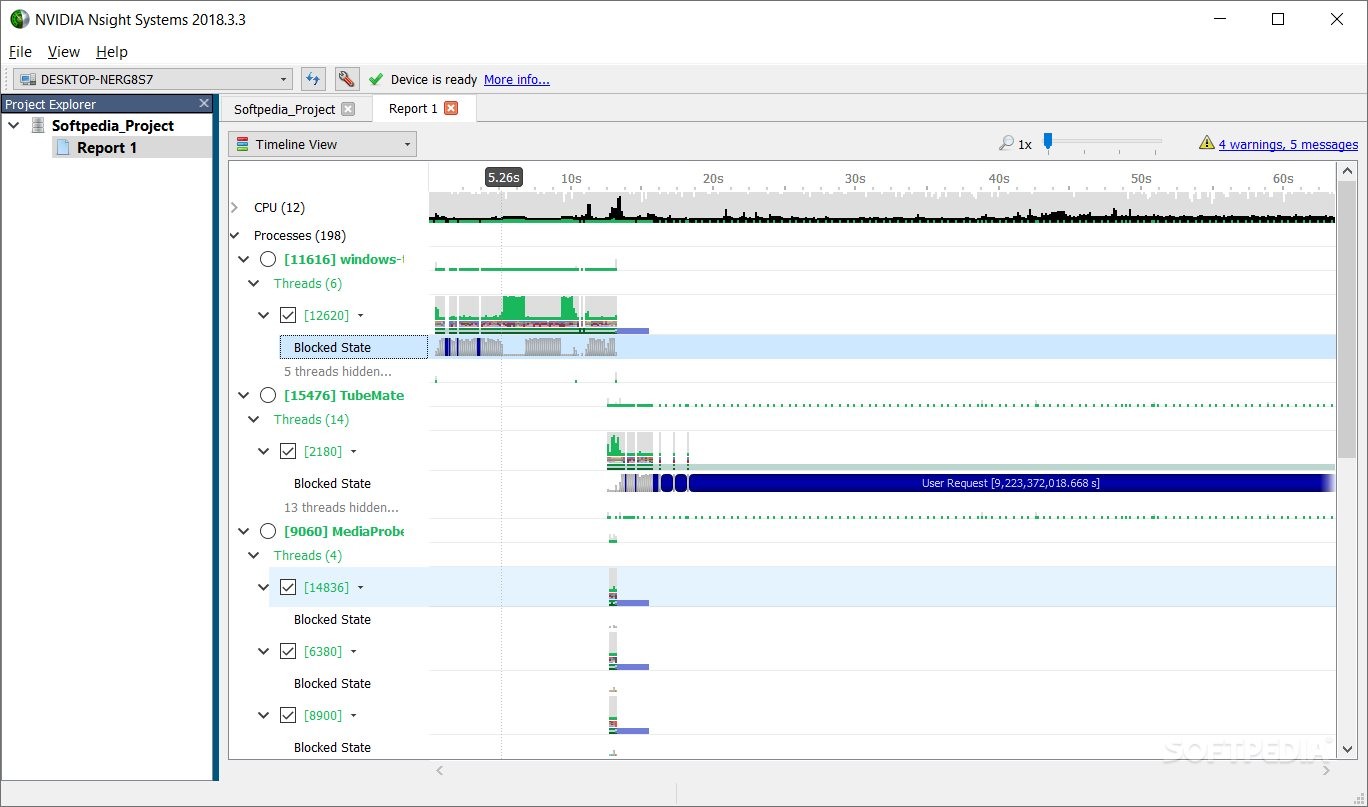

The cudaGraphInstantiate API has been refactored to remove unused parameters. A new nvJitLink library in the CUDA Toolkit supports JIT LTO. The release also includes library optimizations and performance improvements, along with updates to the Nsight Compute and Nsight Systems developer tools.

With device-side graph launch, user code in kernels can dynamically schedule graph launches, greatly increasing the flexibility of CUDA Graphs.

NVIDIA announces the newest CUDA Toolkit software release, 12.0.
